Technology is constantly evolving, so we have to keep up with it to bring the best solutions to our customers. Security issues are found in operating systems every day[11], which means patching the OS, the libraries, and so on. Since containers are the industry standard today, we need an easy, standard, performant, and secure way to update app images.

A very common task in development is creating a Docker image from source code, and usually that image needs to be ready for a cloud environment. There is no single standard way to do this, but there are best practices for generating app images from source code:

- [Small image size] Keep the image as small as possible.

- [Multistage] Build the image in two or more stages, depending on the language. In Go, for example, a first stage holds everything needed to compile the app (dependencies, compiler, external libraries), and once the source code is compiled, the binary is copied into a minimal image (for example, a small Alpine image).

- [Reduce layers] Optimize the Dockerfile, for example by taking into account how each layer is built so that builds perform better[1]. Reduce the number of layers, design ephemeral containers, and avoid storing application data or any build tools that are not needed to run the application.
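The multistage and layer advice above can be sketched for a Go app as follows. This is only an illustration, not a recommended production setup: the image tags (golang:1.21-alpine, alpine:3.19), the file name Dockerfile.multistage, and the assumption that the project has go.mod and go.sum files with a main package at its root are all mine.

```shell
# Write a two-stage Dockerfile for a hypothetical Go app.
# Assumptions: go.mod and go.sum exist, and the main package is at the repo root.
cat > Dockerfile.multistage <<'EOF'
# Stage 1: full Go toolchain, used only to compile the binary
FROM golang:1.21-alpine AS build
WORKDIR /src
# Copy the dependency manifests first so this layer is cached between code changes
COPY go.mod go.sum ./
RUN go mod download
COPY . .
RUN CGO_ENABLED=0 go build -o /out/app .

# Stage 2: minimal runtime image that only receives the compiled binary
FROM alpine:3.19
COPY --from=build /out/app /usr/local/bin/app
USER nobody
ENTRYPOINT ["/usr/local/bin/app"]
EOF

echo "wrote Dockerfile.multistage"
```

To build it you would run `docker build -f Dockerfile.multistage -t myapp:multistage .` from the project root. Copying go.mod and go.sum before the rest of the source lets Docker reuse the cached dependency layer when only the code changes.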

Even with tools such as the Dockerfile linter Hadolint (https://hadolint.github.io/hadolint/), we often spend a lot of time building the image and refactoring the Dockerfile to get a small, efficient image that follows best practices. Pivotal[1] and Heroku[2] identified this need around 2011, given how complex it is to set up images without standards or guidelines. They defined the buildpack[3], a concept that was broadly adopted by Cloud Foundry, Google App Engine, GitLab, Knative, Deis, and more.

Buildpacks from Pivotal and buildpacks from Heroku are slightly different, so in 2018 both companies joined forces to present to the CNCF (Cloud Native Computing Foundation)[4] a project based on buildpacks, but not identical to either implementation.

Once the CNCF accepted the project, they created buildpacks.io as a standard[5] and named it “Cloud Native Buildpacks”, to unify the buildpack ecosystems of Pivotal and Heroku and to offer a higher-level abstraction for building container images. Later, drawing on many years of experience with buildpacks, the Cloud Foundry buildpack engineering team created the Paketo.io project, which implements Cloud Native Buildpacks (CNB) based on the former Cloud Foundry buildpacks.

So what is a buildpack, or Cloud Native Buildpack (CNB)? It is an open source tool, or set of scripts, that transforms your application source code into a container image, and does so in a more secure way. Cloud Native Buildpacks, a CNCF project, help apps meet security and compliance requirements and let you perform upgrades with minimal effort and intervention.

Upgrades also transfer less data over the network, because updates are applied per layer or stack. Cloud Native Buildpacks embrace modern container standards, such as the OCI image format, and take advantage of the latest capabilities of these standards, such as cross-repository blob mounting and image layer “rebasing” on Docker API v2 registries[7].

“Rebasable” images, or image layer “rebasing”, rely on ABI compatibility[6]: the contract provided by OS vendors that guarantees that software does not need to be rebuilt when security patches are applied to the OS layers. This contract is what makes “rebasable” image layers safe to rebase, so you can update the OS layers without the risk of an incompatibility crashing your app.
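With the pack CLI, a rebase could look like the sketch below. It assumes an app image named myapp that was already built with pack and is available in the local Docker daemon; the image name and the guard around the command are my own additions, so that the snippet does nothing harmful on a machine without pack or Docker.

```shell
# Assumption: "myapp" is an image previously built with `pack build`.
IMAGE="myapp"

if command -v pack >/dev/null 2>&1 && docker image inspect "$IMAGE" >/dev/null 2>&1; then
  # Swap the OS (run image) layers under the app; the app layers are not rebuilt.
  pack rebase "$IMAGE"
else
  echo "skipping: this needs the pack CLI and a local image named $IMAGE"
fi
```

Because only the OS layers are replaced, a rebase is usually much faster than a full rebuild, and only the changed layers have to be pushed or pulled afterwards.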

On the other hand, buildpacks are very easy to use when the code is in good shape, with no code smells. When it is not, you will need to refactor your code. The best way to use buildpacks is with pack:

There are many ways to install pack[8]. I used the command line from the “script install” tab of the docs: you only need to run the following line with sudo, or as a user with write permission on /usr/local/bin, and that’s it:

(curl -sSL "https://github.com/buildpacks/pack/releases/download/v0.15.1/pack-v0.15.1-linux.tgz" | sudo tar -C /usr/local/bin/ --no-same-owner -xzv pack)

To use pack we need Docker installed on our machine. pack builds the image with a builder, an image that bundles all the bits and information on how to build your apps: the buildpacks, which inspect the code to detect what kind of app is being built, and an implementation of the lifecycle[9] (an implementation of the Cloud Native Buildpacks specs from the CNCF), which was still in beta at the time of writing. In summary, you download or write the code and change to the app directory, or root path.
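To make the detection idea more concrete, here is a toy detect step for Maven projects. It is only a sketch: a real buildpack ships detection as an executable inside the buildpack, while the function name detect_maven and the single pom.xml check are my own simplifications. The exit codes do follow the buildpack spec[5], where 0 means the buildpack applies and 100 means it does not.

```shell
# Toy "detect" step: does a Maven buildpack apply to this app directory?
# Exit codes follow the buildpack spec: 0 = pass, 100 = fail.
detect_maven() {
  detect_app_dir="${1:-.}"
  if [ -f "$detect_app_dir/pom.xml" ]; then
    return 0
  fi
  return 100
}

# Usage: check the sample app directory (assumes the samples repo was cloned here).
if detect_maven "samples/apps/java-maven"; then
  echo "Maven buildpack applies"
else
  echo "Maven buildpack does not apply"
fi
```

A builder runs a check like this for each of its buildpacks, and only the ones that pass take part in the build.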

# clone the repo

git clone https://github.com/buildpacks/samples

# go to the app directory

cd samples/apps/java-maven

You can also look at samples/buildpacks/java-maven to see how the buildpack definition (CNB[10]) is written in a .toml file and how it is applied when choosing the right builder. In the stacks directory there are Dockerfiles with the recipe and tools used to build an image; every stack has its own Dockerfile definition.

Then run the following line:

# build the app

pack build myapp --builder cnbs/sample-builder:bionic

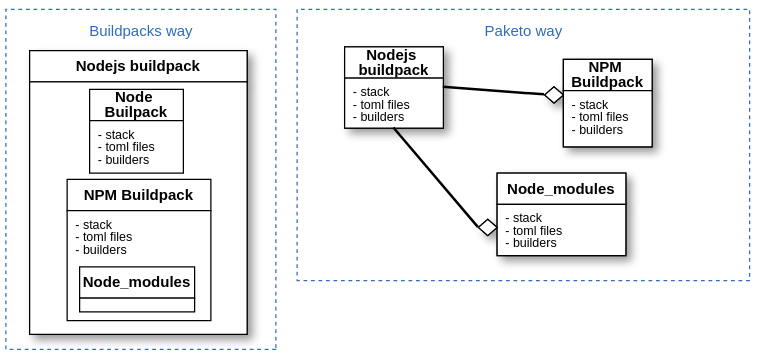

And that’s it, you are using buildpacks. The buildpacks (CNB[10]) specification and file structure can get a little complex as the project grows: you can have buildpacks inside other buildpack definitions, long buildpack files, many TOML files in a hierarchy, and many Dockerfiles. So, at the same time, VMware (Pivotal and Cloud Foundry) created Paketo, whose slogan is “Let's Pack, Push and Deploy!”

Paketo is a new way to build buildpacks. It redefines the relations between the components in a modular way, using composition of dependencies where each element has a single responsibility. This makes buildpacks easier to maintain and to contribute to. In other words, it is like a refactor of the Cloud Foundry buildpacks to avoid monolithic buildpacks and Dockerfiles written in different programming languages for different purposes.

Paketo offers a base image called tiny, which is effectively distroless, and provides a way to deploy application code more rapidly without creating customizations for each deployment platform. This saves the container community from reinventing the same wheels over and over. The separation of concerns that buildpacks provide makes developers' lives easier, as they can focus more on application and product development.

Paketo Buildpacks need Docker and pack installed. The community has written a lot of samples to try out (https://github.com/paketo-buildpacks/samples) and encourages you to contribute. Paketo Buildpacks could be the next generation of, or an evolution from, Docker (Dockerfiles), letting us focus on the business problems we would like to solve. Let's Pack, Push and Deploy!
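Trying one of those samples could look like the sketch below. Treat the details as assumptions rather than official instructions: the builder name paketobuildpacks/builder:base and the java/maven sample path are what I would expect from the Paketo docs[13] and samples repo and may have changed since, and the image name paketo-demo-app and the local directory paketo-samples are placeholders of mine.

```shell
# Assumptions: builder name and sample path may differ in current Paketo releases.
BUILDER="paketobuildpacks/builder:base"
APP_DIR="paketo-samples/java/maven"

if command -v pack >/dev/null 2>&1 && command -v git >/dev/null 2>&1 && docker info >/dev/null 2>&1; then
  # Clone the community samples once, then build the Maven sample with the Paketo builder.
  [ -d paketo-samples ] || git clone https://github.com/paketo-buildpacks/samples paketo-samples
  pack build paketo-demo-app --path "$APP_DIR" --builder "$BUILDER"
else
  echo "skipping: this needs git, the pack CLI, and a running Docker daemon"
fi
```

Note that there is no Dockerfile anywhere in this flow: the builder supplies everything needed to build and run the app.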

References

[1] https://run.pivotal.io/

[2] https://www.heroku.com/

[3] https://buildpacks.io/

[4] https://www.cncf.io/

[5] https://github.com/buildpacks/spec/blob/main/buildpack.md

[6] https://en.wikipedia.org/wiki/Application_binary_interface

[7] https://buildpacks.io/docs/app-journey/

[8] https://buildpacks.io/docs/tools/pack/

[9] https://github.com/buildpacks/lifecycle/releases

[10] Cloud native buildpacks

[11] https://www.researchgate.net/publication/315468931_A_Study_of_Security_Vulnerabilities_on_Docker_Hub

[12] https://blog.codecentric.de/en/2020/11/buildpacks-spring-boot/

[13] https://paketo.io/docs/

Sorry for any grammatical or spelling mistakes

hugs

j3nnn1 (:

In 2021, KubeVela (https://kubevela.io/) and the OAM spec (https://github.com/oam-dev/spec) were accepted as a CNCF sandbox project. KubeVela focuses on the application rather than on the implementation; OAM is the specification, or standard, behind it.